Not every AI agent is worth your trust. Some save hours, others create new headaches. The difference isn’t just technical – it’s practical. A “good” agent improves real workflows. A “bad” agent adds risk, confusion, or extra work.

If you’re evaluating AI agents for your business, here’s how I suggest telling an agent that drives a productive, effective transformation apart from one that wastes time, money, and effort.

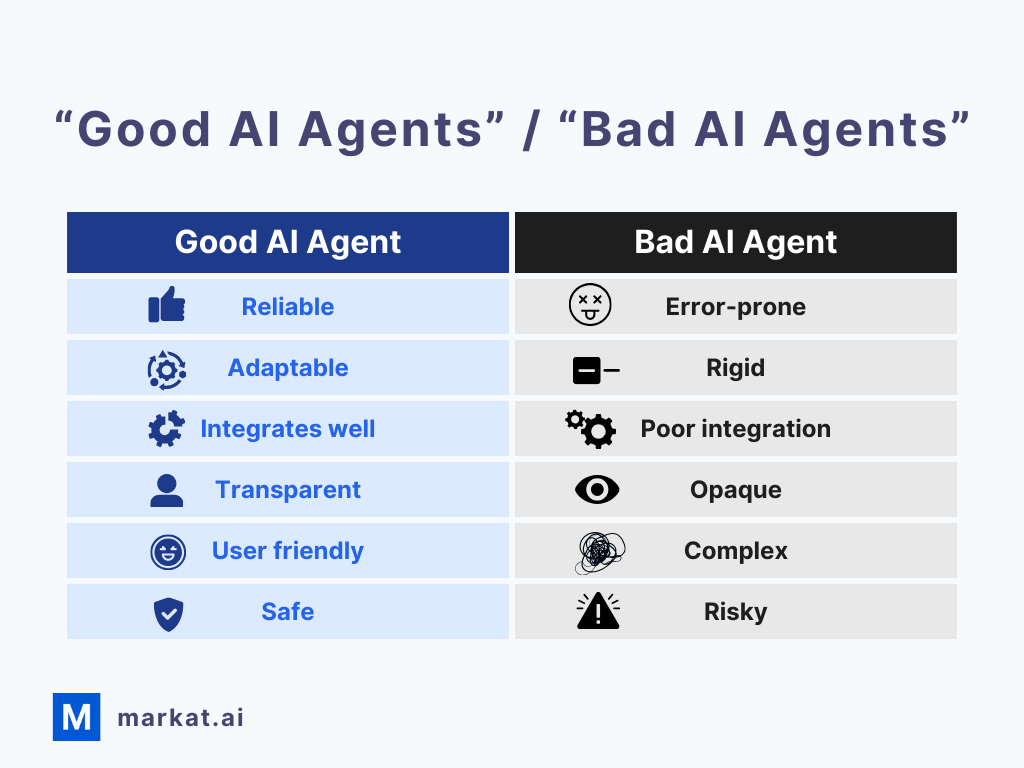

Good vs Bad at a Glance

| Factor | Good AI Agent | Bad AI Agent |
|---|---|---|
| Reliability | Accurate, dependable | Error-prone |
| Adaptability | Learns, improves | Static, rigid |
| Integration | Smooth fit | Doesn’t connect |
| Transparency | Clear enough to trust | Opaque, black-box |
| Usability | Easy to adopt | Confusing, clunky |
| Safety | Bias-aware, compliant | Risky outputs |

Strong agents share the traits in the “Good AI Agent” column; weak ones show the opposite. The difference is obvious once you know what to look for.

How to Spot the Difference in Practice

A good agent isn’t about flashy features. It’s about how it performs in real workflows.

- Reliability: Accuracy isn’t optional. An occupational therapist using an agent to draft patient reports needs absolute precision, because every error costs trust and time.

- Adaptability: Agents should improve with context and feedback. A rigid one quickly becomes irrelevant.

- Integration: If a plumber needs to file a leak-detection report for an insurance claim, the agent must slot into existing reporting systems. If it doesn’t connect, it’s just extra work.

- Transparency: You should be able to see why it made a decision. If you can’t, it’s a black box you can’t trust.

- Usability: If your team avoids it after the first test, it’s not simple enough.

- Safety: Biased or unsafe outputs aren’t just annoying; they create compliance and reputational risk.

The biggest red flag: an agent that adds confusion instead of removing it.

How to Evaluate an AI Agent

Many businesses go wrong by relying on a demo. A demo shows potential, not reality.

Here’s how to test properly:

- Define success up front: Pick the metrics that matter to you, such as accuracy, time saved, or customer satisfaction.

- Test in your workflow: Run it on real cases from your business, not canned examples.

- Listen to your team: If they find it confusing or unreliable, adoption will fail.

- Monitor continuously: Agents aren’t “set and forget.” Without oversight, even good ones drift into errors.
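To make “define success up front” and “test in your workflow” concrete, here is a minimal Python sketch. It assumes your agent can be wrapped as a plain function that takes a case description and returns an answer, and that you have real past cases with answers your team accepted. `Case`, `evaluate_agent`, `toy_agent`, and the two metrics are hypothetical illustrations, not part of any specific product, and the exact-match check is only a placeholder for whatever domain-specific review you actually use.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Case:
    """A real case from your workflow, with the answer your team accepted."""
    text: str
    expected: str
    manual_minutes: float  # typical time a person spends on this case


def evaluate_agent(agent: Callable[[str], str], cases: List[Case]) -> Dict[str, float]:
    """Run the agent on real cases and report the metrics chosen up front."""
    if not cases:
        raise ValueError("need at least one real case to evaluate")
    correct = 0
    minutes_saved = 0.0
    for case in cases:
        start = time.perf_counter()
        output = agent(case.text)
        agent_minutes = (time.perf_counter() - start) / 60
        # Exact match is a placeholder; swap in a domain-specific check or human review.
        if output.strip().lower() == case.expected.strip().lower():
            correct += 1
            # Only count time saved when the output is usable as-is.
            minutes_saved += case.manual_minutes - agent_minutes
    return {"accuracy": correct / len(cases), "minutes_saved": minutes_saved}


# Hypothetical stand-in agent; replace with a call to the agent you are evaluating.
def toy_agent(text: str) -> str:
    return "wet" if "40%" in text else "dry"


# Illustrative cases only; use real, representative cases from your own workflow.
cases = [
    Case("Kitchen sink leak, moisture reading 40%", "wet", 15),
    Case("Moisture reading 10%, no visible damage", "dry", 15),
    Case("Bathroom ceiling stain, moisture reading 35%", "wet", 20),
]
print(evaluate_agent(toy_agent, cases))
```

Counting time saved only on correct outputs is a deliberately conservative choice: a wrong answer usually costs extra time to catch and fix, so it should not be credited as a saving.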

Why Feedback Loops Matter

AI agents either get better with use or worse with neglect. Without a feedback system, even strong agents degrade over time.
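A feedback loop can start very small: record whether each output was right, then compare recent accuracy against the baseline you measured before rollout. The sketch below assumes reviewers mark each agent output as correct or incorrect; the class name `FeedbackMonitor`, the window size, and the tolerance are hypothetical choices you would tune for your own workflow.

```python
from __future__ import annotations

from collections import deque
from typing import Optional


class FeedbackMonitor:
    """Tracks reviewer feedback and flags when recent accuracy drops below baseline."""

    def __init__(self, baseline_accuracy: float, window: int = 50, tolerance: float = 0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Keep only the most recent `window` verdicts (True = output was correct).
        self.recent: deque[bool] = deque(maxlen=window)

    def record(self, was_correct: bool) -> None:
        self.recent.append(was_correct)

    def recent_accuracy(self) -> Optional[float]:
        if not self.recent:
            return None
        return sum(self.recent) / len(self.recent)

    def is_degrading(self) -> bool:
        """True once the window is full and accuracy falls below baseline minus tolerance."""
        acc = self.recent_accuracy()
        if acc is None or len(self.recent) < self.recent.maxlen:
            return False  # not enough recent feedback to judge yet
        return acc < self.baseline - self.tolerance


# Usage: the 0.9 baseline is illustrative; take it from your own pre-rollout evaluation.
monitor = FeedbackMonitor(baseline_accuracy=0.9, window=10)
for verdict in [True] * 7 + [False] * 3:
    monitor.record(verdict)
print(monitor.recent_accuracy(), monitor.is_degrading())  # → 0.7 True
```

Waiting for a full window before raising a flag avoids false alarms from a handful of early results; the trade-off is that a small window reacts faster but is noisier.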

That’s why we’re building Markat.ai as a platform that connects quality AI agents and tools with businesses – a space where developers can refine their solutions with structured feedback, and businesses can validate performance before committing.

Checklist Before You Commit

Run through this checklist before rollout. If your agent fails more than one of these, it’s not ready:

✓ Reliable outputs in your workflow

✓ Transparent reasoning you can trust

✓ Integration with existing tools

✓ Safe, compliant, bias-aware

✓ Simple enough for real users

✓ Clear ROI (time saved, accuracy, or satisfaction)
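If several people score the same agent, it can help to encode the checklist rule (more than one failed item means not ready) so everyone applies it the same way. A minimal sketch, assuming each item is recorded as pass or fail; the `CHECKLIST` keys and the `failed_items` and `is_ready` functions are hypothetical names.

```python
from typing import Dict, List

# One key per checklist item above (names are illustrative).
CHECKLIST = (
    "reliable_outputs",
    "transparent_reasoning",
    "integration",
    "safe_compliant",
    "simple_for_users",
    "clear_roi",
)


def failed_items(results: Dict[str, bool]) -> List[str]:
    """Return the checklist items that failed; an unscored item counts as a failure."""
    return [item for item in CHECKLIST if not results.get(item, False)]


def is_ready(results: Dict[str, bool]) -> bool:
    """Apply the checklist rule: failing more than one item means the agent isn't ready."""
    return len(failed_items(results)) <= 1


scores = {item: True for item in CHECKLIST}
scores["clear_roi"] = False
print(failed_items(scores), is_ready(scores))
```

Treating an unscored item as a failure is a conservative choice: it stops an agent from passing simply because nobody checked it.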

FAQ

What defines a good AI agent?

One that’s reliable, adaptable, transparent, safe, and easy to use in your actual workflow.

What are common signs of a bad AI agent?

Hallucinations, black-box behavior, poor integration, bias, and no learning loop.

Can a bad agent improve?

Yes. A bad agent can improve with retraining, feedback, and integration fixes, but don’t assume it will improve on its own.

My Final Words

A bad AI agent doesn’t just waste money – it damages trust. A good one feels almost invisible. It just works, fits into your workflow, and makes life easier.

The difference comes down to evaluation. Don’t stop at demos or feature lists. Test in your real environment, measure outcomes that matter, and trust the results.

At Markat.ai, we’re building a community where businesses can validate AI agents and tools in real environments, and where developers can refine their solutions through real feedback. Because in the end, the only AI that matters is the one that actually works for you.